Character Encoding Terminologies

At the lowest level, a computer only recognizes numbers as sequences of bits. It relies on a character encoding scheme to represent the characters and symbols of the human world. Before we dive into programming with Unicode, it is important to know the character encoding terminology, so that we understand what is going on when it comes to programming in Java.

General Character Encoding

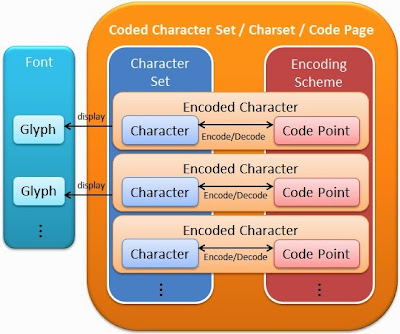

Character is a generic term for a letter of any language, a symbol, a control character, a formatting character, and so on. It is an abstract notion: just a piece of textual data without any specific appearance or style. In any character encoding, each character is assigned a unique integer value called a Code Point. Once the mapping between a character and its code point is established, it never changes, and the pair is therefore called an Encoded Character. Every character encoding scheme can only cope with a certain Code Space, which is the range of integer values (the total number of code points) it supports; its size depends on the number of bits the encoding uses per character.

| Encoding | Bit length | Code space size |
|---|---|---|
| ASCII | 7 | 128 |
| Windows-1252 | 8 | 256 |
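To make the idea of a code point concrete, here is a minimal Java sketch. The class and method names (`CodePointBasics`, `codePoints`, `fromCodePoint`, `codeSpaceSize`) are hypothetical, chosen for illustration. It walks a string by code point with `String.codePoints()`, maps a code point back to its character with `Character.toChars`, and computes the code space of an n-bit encoding as 2^n, which matches the table above.

```java
import java.util.Arrays;

public class CodePointBasics {

    // Returns the code points of a string, walking it by code point rather than by char.
    static int[] codePoints(String text) {
        return text.codePoints().toArray();
    }

    // Converts a code point back into its character; a String is used because
    // some code points need two Java chars.
    static String fromCodePoint(int codePoint) {
        return new String(Character.toChars(codePoint));
    }

    // Size of the code space for an encoding that uses the given number of bits per character.
    static int codeSpaceSize(int bits) {
        return 1 << bits;
    }

    public static void main(String[] args) {
        String text = "a\u00A9"; // 'a' followed by the copyright sign (U+00A9)
        System.out.println(Arrays.toString(codePoints(text))); // [97, 169]
        System.out.println(fromCodePoint(97));                 // a
        System.out.println(codeSpaceSize(7));                  // 128 (ASCII)
        System.out.println(codeSpaceSize(8));                  // 256 (Windows-1252)
    }
}
```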

The code space determines the maximum number of distinct characters that the corresponding Character Set can contain. A character set is a standard that defines a particular, limited collection of characters. There are many character sets out there. For example, Windows has various character sets for different languages, such as Arabic (Windows-1256), Korean (Windows-949) and Western European (Windows-1252).
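Whether a character belongs to a character set can be checked with `CharsetEncoder.canEncode` from `java.nio.charset`. The sketch below assumes the `windows-1252` charset is available in your JDK (standard OpenJDK builds ship it, but the Java specification only guarantees a handful of charsets such as US-ASCII, ISO-8859-1 and the UTF variants); `CharsetCoverage` is a hypothetical class name.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class CharsetCoverage {

    // True if every character of the text can be encoded by the given charset.
    static boolean canEncode(Charset charset, String text) {
        // A fresh encoder per call: encoders are stateful and not thread-safe.
        return charset.newEncoder().canEncode(text);
    }

    public static void main(String[] args) {
        Charset ascii = StandardCharsets.US_ASCII;
        Charset windows1252 = Charset.forName("windows-1252"); // assumed to be available
        String copyright = "\u00A9"; // U+00A9 COPYRIGHT SIGN
        String hangul = "\uD55C";    // U+D55C HANGUL SYLLABLE HAN

        System.out.println(canEncode(ascii, copyright));       // false: outside the 7-bit set
        System.out.println(canEncode(windows1252, copyright)); // true
        System.out.println(canEncode(windows1252, hangul));    // false: not a Western European character
    }
}
```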

Charset is basically short for Coded Character Set: a set of characters encoded by a specific character encoding scheme. This is why a charset name is usually the same as the name of its character encoding. Code Page refers to the same thing, but the term is normally used by computer vendors. For example, UTF-8 has the code page number 1208 at IBM, 65001 at Microsoft and 4110 at SAP.
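In Java, `Charset.forName` resolves a charset by its canonical name or by any registered alias, case-insensitively, so a code-page-style name such as `cp1252` leads to the same charset as `windows-1252`. Below is a minimal sketch (`CharsetNames` is a hypothetical class name). Not every vendor code page number is necessarily registered as a Java alias, so `Charset.isSupported` is the safe way to check a name before relying on it.

```java
import java.nio.charset.Charset;

public class CharsetNames {

    // Resolves a charset name or alias to Java's canonical charset name.
    static String canonicalName(String nameOrAlias) {
        return Charset.forName(nameOrAlias).name();
    }

    public static void main(String[] args) {
        // Lookup is case-insensitive and accepts registered aliases.
        System.out.println(canonicalName("utf8"));   // UTF-8
        System.out.println(canonicalName("cp1252")); // windows-1252
        // The set of aliases the running JDK knows for UTF-8 (order not guaranteed).
        System.out.println(Charset.forName("UTF-8").aliases());
    }
}
```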

What is displayed on the screen is called a Glyph Image. A Font is a collection of glyphs in a certain format and style. A font does not necessarily cover every character of a character set. For example, Arial cannot render Japanese characters because it does not contain any glyphs for them; what you will probably see on the screen is a square box instead. This means the textual data is there, but there is no glyph to depict it on the screen. The Japanese characters are rendered correctly when we use Arial Unicode MS instead.

Unicode Encoding

The Universal Character Set (UCS) is an international standard (ISO/IEC 10646) that defines a set of characters intended to cover all the characters used around the world. It is developed in conjunction with the Unicode standard, and hence its size is determined by the Unicode code space. Initially, Unicode was only intended to cover the characters of all modern languages. The Unicode-based encoding scheme at that time was UCS-2 (2-byte Universal Character Set), a 16-bit encoding with a code space of 65,536. However, since Unicode 2.0, UCS-2 has been superseded by UTF-16, and the Unicode code space has been greatly extended to 1,114,112 code points (U+0000 to U+10FFFF) by the surrogate characters mechanism. In other words, there are over 1 million code points, or character placeholders, available for characters in UCS. At the time of writing, UCS contained nearly one hundred thousand abstract characters, so there is still plenty of room for growth.
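The numbers above are available as constants on `java.lang.Character`. This minimal sketch (`UnicodeCodeSpace` is a hypothetical class name) derives the 1,114,112-code-point space from `Character.MAX_CODE_POINT`, takes the old UCS-2 range from `Character.MIN_SUPPLEMENTARY_CODE_POINT`, and uses `Character.charCount` to tell whether a code point needs a UTF-16 surrogate pair.

```java
public class UnicodeCodeSpace {

    // Number of code points in the Unicode code space: U+0000..U+10FFFF.
    static int codeSpaceSize() {
        return Character.MAX_CODE_POINT + 1;
    }

    // Number of code points addressable by a single 16-bit unit (the UCS-2 range).
    static int ucs2Size() {
        return Character.MIN_SUPPLEMENTARY_CODE_POINT; // 0x10000
    }

    // True if the code point lies beyond the UCS-2 range and needs a surrogate pair in UTF-16.
    static boolean needsSurrogatePair(int codePoint) {
        return Character.charCount(codePoint) == 2;
    }

    public static void main(String[] args) {
        System.out.println(codeSpaceSize());              // 1114112
        System.out.println(ucs2Size());                   // 65536
        System.out.println(codeSpaceSize() / ucs2Size()); // 17 planes of 65,536 code points
        System.out.println(needsSurrogatePair(0x1D160));  // true
        System.out.println(needsSurrogatePair(0x2082));   // false
    }
}
```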

Unicode takes the same approach to code points, but it treats the code point as a layer between the character and the encoded value. A Unicode code point is written as U+ followed by its hexadecimal value, padded to at least four digits (for example, U+0061). Unicode also defines properties for each character, such as Name, General Category, Script, Block and various binary properties. Each character is still mapped to a unique code point, and the code point is then encoded by a Unicode-based character encoding scheme; the well-known ones are UTF-8, UTF-16 and UTF-32. Different Unicode encodings encode the same code point into different byte sequences (shown here in hexadecimal) made of Code Units. A code unit is 8 bits in UTF-8, 16 bits in UTF-16 and 32 bits in UTF-32, and the number of code units per code point varies: 1 to 4 in UTF-8, 1 or 2 in UTF-16, and always 1 in UTF-32. Examples are shown below.

| Character | Unicode name | Code point | UTF-8 code units | UTF-16BE code units | UTF-32BE code units |
|---|---|---|---|---|---|
| a | Latin Small Letter A | U+0061 | 61 | 0061 | 00000061 |
| © | Copyright Sign | U+00A9 | C2 A9 | 00A9 | 000000A9 |
| ₂ | Subscript Two | U+2082 | E2 82 82 | 2082 | 00002082 |
| 𝅘𝅥𝅮 | Musical Symbol Eighth Note | U+1D160 | F0 9D 85 A0 | D834 DD60 | 0001D160 |
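The table above can be reproduced with the JDK's own encoders. The following minimal sketch (`CodeUnitTable` and its methods are hypothetical names) encodes each code point with `String.getBytes(Charset)` and groups the bytes into code units of 1, 2 or 4 bytes. It looks up `UTF-32BE` with `Charset.forName`, assuming your JDK provides it (OpenJDK does, although it is not one of the `StandardCharsets` constants), and takes the character names from `Character.getName`, available since Java 7.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.StringJoiner;

public class CodeUnitTable {

    // Encodes one code point and formats the bytes as hex, grouped into code units
    // of unitSize bytes (1 for UTF-8, 2 for UTF-16, 4 for UTF-32).
    static String codeUnits(int codePoint, Charset charset, int unitSize) {
        byte[] bytes = new String(Character.toChars(codePoint)).getBytes(charset);
        StringJoiner units = new StringJoiner(" ");
        for (int i = 0; i < bytes.length; i += unitSize) {
            StringBuilder unit = new StringBuilder();
            for (int j = i; j < i + unitSize; j++) {
                unit.append(String.format("%02X", bytes[j] & 0xFF));
            }
            units.add(unit);
        }
        return units.toString();
    }

    // U+ notation with at least four hex digits.
    static String notation(int codePoint) {
        return String.format("U+%04X", codePoint);
    }

    public static void main(String[] args) {
        Charset utf32be = Charset.forName("UTF-32BE"); // assumed to be available
        int[] samples = {0x0061, 0x00A9, 0x2082, 0x1D160};
        for (int cp : samples) {
            System.out.printf("%s | %s | %s | %s | %s%n",
                    notation(cp),
                    Character.getName(cp),
                    codeUnits(cp, StandardCharsets.UTF_8, 1),
                    codeUnits(cp, StandardCharsets.UTF_16BE, 2),
                    codeUnits(cp, utf32be, 4));
        }
    }
}
```

Each printed line corresponds to one table row, for example `U+00A9 | COPYRIGHT SIGN | C2 A9 | 00A9 | 000000A9`.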

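A question to wrap up: UTF-16 can encode over 1 million code points, so how about UTF-8? Can it perhaps handle only half of the code space of UTF-16? The answer is no: UTF-8 has exactly the same code space as UTF-16. The code space is defined by Unicode, not by the size of the code unit, and UTF-8 simply uses more code units (up to four bytes) for higher code points.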
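To see why UTF-8 reaches the whole code space, here is a minimal hand-rolled UTF-8 encoder for a single code point, written for illustration only (in real code, use `String.getBytes(StandardCharsets.UTF_8)`). `Utf8CodeSpace`, `utf8Length` and `encodeUtf8` are hypothetical names. It follows the byte-length ranges of the UTF-8 definition in RFC 3629, where a four-byte sequence carries 21 payload bits, enough for U+10FFFF. It assumes the input is a valid code point that is not a surrogate, and cross-checks every such code point against the JDK encoder.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class Utf8CodeSpace {

    // Number of UTF-8 bytes needed for a code point (ranges as in RFC 3629).
    static int utf8Length(int codePoint) {
        if (codePoint < 0x80) return 1;    // 7 payload bits
        if (codePoint < 0x800) return 2;   // 11 payload bits
        if (codePoint < 0x10000) return 3; // 16 payload bits
        return 4;                          // 21 payload bits, enough for U+10FFFF
    }

    // Encodes one valid, non-surrogate code point; for illustration only.
    static byte[] encodeUtf8(int codePoint) {
        int len = utf8Length(codePoint);
        byte[] out = new byte[len];
        if (len == 1) {
            out[0] = (byte) codePoint;
            return out;
        }
        // Continuation bytes have the form 10xxxxxx; fill them from the end, 6 bits at a time.
        for (int i = len - 1; i > 0; i--) {
            out[i] = (byte) (0x80 | (codePoint & 0x3F));
            codePoint >>>= 6;
        }
        // Lead byte: len one-bits, a zero bit, then the remaining high bits (0xC0, 0xE0 or 0xF0).
        int leadMarker = (0xFF00 >> len) & 0xFF;
        out[0] = (byte) (leadMarker | codePoint);
        return out;
    }

    public static void main(String[] args) {
        int max = Character.MAX_CODE_POINT; // U+10FFFF, the last code point
        System.out.println(encodeUtf8(max).length); // 4

        // Cross-check every non-surrogate code point against the JDK encoder.
        boolean allMatch = true;
        for (int cp = 0; cp <= Character.MAX_CODE_POINT; cp++) {
            if (cp >= Character.MIN_SURROGATE && cp <= Character.MAX_SURROGATE) continue;
            byte[] expected = new String(Character.toChars(cp)).getBytes(StandardCharsets.UTF_8);
            if (!Arrays.equals(encodeUtf8(cp), expected)) {
                allMatch = false;
                break;
            }
        }
        System.out.println(allMatch); // true
    }
}
```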

Related topics:

Unicode Support in Java

Encoding and Decoding

Endianness and Byte Order Mark (BOM)

Surrogate Characters Mechanism

Unicode Regular Expression

Characters Normalization

Text Collation

