Buffer Introduction

Input/Output, or I/O for short, is about transmitting data or signals. In traditional Java IO, java.io.InputStream and java.io.OutputStream have been fulfilling this purpose, but they leave us with raw binary data in a fixed-size byte array. The byte array serves as a data container or temporary staging area, but it is up to us to implement how the byte array is manipulated. Manipulating the byte array directly is not always a wise approach, especially when the data involves multi-byte characters. Read my other post, Encoding and Decoding, to learn why.

java.nio.Buffer is one of the key abstractions of Java NIO, which was introduced in Java 1.4. While it retains the same notion of a fixed-size data container, it is also much more efficient to work with, thanks to the capabilities below.

- It encapsulates how the backing data is accessed, whether direct or non-direct.

- It provides a rich set of operations for manipulating the backing data by making use of its buffer attributes.

- Primitive-type buffer classes make data transfer easier, because data is read and written in units of the respective primitive type's size.

Working with Buffer

To work with Buffer effectively, we must understand what the buffer attributes are and how they work.

Buffer attributes

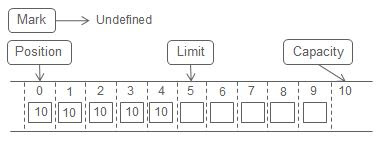

Capacity: The maximum number of elements that the buffer can hold. Capacity is set when a buffer object is created; it cannot be negative and it never changes. Trying to read or write past the end of the buffer with the relative get() or put() methods throws java.nio.BufferUnderflowException or java.nio.BufferOverflowException respectively. (Strictly, these are thrown once the position has reached the limit, which can never exceed the capacity.)

Limit: The index of the first element that should not be read or written. It marks the end of the accessible data. Reading or writing an element at an index greater than or equal to the limit with the absolute get(index) or put(index, value) methods throws java.lang.IndexOutOfBoundsException. For a newly allocated buffer, the limit equals the capacity. It cannot be negative and is never greater than the capacity.

Position: The index of the next element to be read or written. It acts like a moving pointer that advances automatically after every relative read or write operation, and these operations only ever move it forward. It cannot be negative and is never greater than the limit.

Mark: A saved copy of the position, recorded by mark(), so that the position can later be set back to that index with reset(). Initially, the mark is undefined. An existing mark is discarded whenever the position or the limit is adjusted to a value smaller than the mark. Calling reset() when the mark is undefined throws java.nio.InvalidMarkException.
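The sketch below is a minimal illustration, not part of the original examples, that provokes each of the exceptions described above on a small byte buffer. The class name AttributeErrorsDemo and its helpers exceptionOf() and report() are hypothetical, and the lambdas assume Java 8 or later. The comments name the exception that the Buffer and ByteBuffer documentation specifies for each call.

```java
import java.nio.ByteBuffer;

class AttributeErrorsDemo {

    // Hypothetical helper: runs an action and returns the RuntimeException it throws,
    // or null if it completes normally.
    static RuntimeException exceptionOf(Runnable action) {
        try {
            action.run();
            return null;
        } catch (RuntimeException e) {
            return e;
        }
    }

    // Hypothetical helper: prints which exception (if any) an action throws.
    static void report(String label, Runnable action) {
        RuntimeException e = exceptionOf(action);
        System.out.println(label + " -> " + (e == null ? "no exception" : e.getClass().getName()));
    }

    public static void main(String[] args) {
        ByteBuffer full = ByteBuffer.allocate(2);
        full.put((byte) 1).put((byte) 2); // position == limit == capacity == 2

        // Specified to throw java.nio.BufferOverflowException
        report("relative put at the limit", () -> full.put((byte) 3));
        // Specified to throw java.nio.BufferUnderflowException
        report("relative get at the limit", () -> full.get());
        // Specified to throw java.lang.IndexOutOfBoundsException
        report("absolute get at index == limit", () -> full.get(2));
        // Specified to throw java.nio.InvalidMarkException
        report("reset() without a mark", () -> ByteBuffer.allocate(2).reset());
    }
}
```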

The rule below summarizes the conditions above. This relationship between the attributes is an invariant that always holds:

0 <= mark <= position <= limit <= capacity

The diagram above illustrates the default state of the buffer attributes in a newly created buffer object.

When data is written into the buffer, the position advances automatically, one element at a time and always forward. The same applies to read operations.
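A minimal sketch of this movement follows, assuming an 8-byte heap buffer for writing and a wrapped 3-byte array for reading (wrap() is covered under Creating buffer). AttributesDemo and describe() are hypothetical names used only here.

```java
import java.nio.ByteBuffer;

class AttributesDemo {

    // Formats position, limit and capacity of a buffer on one line.
    static String describe(ByteBuffer buffer) {
        return "position=" + buffer.position()
                + " limit=" + buffer.limit()
                + " capacity=" + buffer.capacity();
    }

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.allocate(8);
        System.out.println(describe(buffer)); // position=0 limit=8 capacity=8

        // Each relative put() writes at the current position, then advances it by one.
        buffer.put((byte) 1).put((byte) 2).put((byte) 3);
        System.out.println(describe(buffer)); // position=3 limit=8 capacity=8

        // Reading works the same way: each relative get() advances the position by one.
        ByteBuffer reader = ByteBuffer.wrap(new byte[] {10, 20, 30});
        byte first = reader.get(); // reads index 0
        System.out.println("read " + first + ", " + describe(reader)); // read 10, position=1 limit=3 capacity=3
    }
}
```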

Creating buffer

Every primitive data type except boolean has its own buffer class: ByteBuffer, CharBuffer, ShortBuffer, IntBuffer, LongBuffer, FloatBuffer and DoubleBuffer.

None of them can be instantiated directly because they are all abstract classes, but we can create a buffer object of a specific primitive type by calling the static factory method allocate() or wrap().
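To illustrate both factory methods, the sketch below creates a few buffers of different primitive types. The class name is hypothetical. Note that capacity is counted in elements of the buffer's type, not in bytes.

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.DoubleBuffer;
import java.nio.IntBuffer;

class CreateBuffersDemo {

    public static void main(String[] args) {
        // allocate(): a new buffer with a new, zero-filled backing array.
        IntBuffer ints = IntBuffer.allocate(4);
        DoubleBuffer doubles = DoubleBuffer.allocate(4);

        // wrap(): a new buffer that uses an existing array as its backing array.
        CharBuffer chars = CharBuffer.wrap(new char[] {'a', 'b', 'c'});
        ByteBuffer bytes = ByteBuffer.wrap(new byte[] {1, 2, 3, 4, 5});

        // Capacity counts elements, so 4 doubles means capacity 4 (not 32 bytes).
        System.out.println(ints.capacity());    // 4
        System.out.println(doubles.capacity()); // 4
        System.out.println(chars.capacity());   // 3
        System.out.println(bytes.capacity());   // 5

        // IntBuffer buffer = new IntBuffer(); // would not compile: IntBuffer is abstract
    }
}
```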


Wrapper buffers

wrap(<primitive-data-type>[] array)

This method creates a buffer object that wraps the given existing primitive-type array and uses it as the backing array. Below is an example of creating a byte buffer object. You can create other types of buffer object by calling the wrap() method of the specific primitive buffer class.

```java
import java.nio.ByteBuffer;

public class WrapBufferExample {

    public static void main(String[] args) {
        byte[] original = new byte[5];
        ByteBuffer byteBuffer = ByteBuffer.wrap(original); // original becomes the backing array
        byte[] array = byteBuffer.array(); // Get the backing array (array == original)
        array[3] = (byte) 30;              // Modify the original array
        printBytes(byteBuffer);            // Print buffer's data content
        byteBuffer.put(4, (byte) 90);      // Modify buffer's data content (absolute put)
        printBytes(array);                 // Print original array
    }

    public static void printBytes(ByteBuffer byteBuffer) {
        System.out.print("Print buffer's data content: ");
        byteBuffer.clear(); // position = 0, limit = capacity, so every element is read
        while (byteBuffer.hasRemaining()) {
            System.out.printf("%d ", byteBuffer.get());
        }
        System.out.println();
    }

    public static void printBytes(byte[] bytes) {
        System.out.print("Print original array: ");
        for (byte b : bytes) {
            System.out.printf("%d ", b);
        }
        System.out.println();
    }
}
```

Print buffer's data content: 0 0 0 30 0

Print original array: 0 0 0 30 90

One important point to note is that the array() method returns the backing array itself, not a copy. Modifying this array modifies the buffer's content, and vice versa.

Direct buffers

allocateDirect(int capacity)

This method exists only in ByteBuffer. For a buffer created with this method, the Java virtual machine makes a best effort to perform native I/O operations directly upon it; that is, it will attempt to avoid copying the buffer's content to (or from) an intermediate buffer before (or after) each invocation of one of the underlying operating system's native I/O operations. A direct buffer can therefore offer better I/O performance than a non-direct buffer. However, it comes with drawbacks:

- Higher allocation and deallocation costs compared to non-direct buffers.

- The buffer content may reside outside the normal garbage-collected heap, so its impact on the application's memory footprint might not be obvious.

Therefore, it is recommended to use direct buffers only when switching from a non-direct buffer to a direct buffer yields a measurable performance improvement in your application.
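A buffer reports whether it is direct through isDirect(). The sketch below (hypothetical class name) only checks which kind of buffer each factory method returns. It deliberately does not time anything, since any performance difference depends on the JVM, the operating system and the workload.

```java
import java.nio.ByteBuffer;

class DirectBufferDemo {

    public static void main(String[] args) {
        ByteBuffer heap = ByteBuffer.allocate(1024);          // non-direct, backed by a Java array
        ByteBuffer wrapped = ByteBuffer.wrap(new byte[1024]); // non-direct as well
        ByteBuffer direct = ByteBuffer.allocateDirect(1024);  // direct

        System.out.println("allocate:       " + heap.isDirect());    // false
        System.out.println("wrap:           " + wrapped.isDirect()); // false
        System.out.println("allocateDirect: " + direct.isDirect());  // true
    }
}
```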

View buffers

as<primitive-data-type>Buffer()

These methods also exist only in ByteBuffer. Each one creates a view buffer of the respective primitive type. A byte buffer's content is indexed in terms of bytes; a view buffer lets the same content be indexed in terms of the size of that specific primitive type. Below is an example of creating an integer view buffer from a byte buffer.

```java
import java.nio.Buffer;
import java.nio.ByteBuffer;
import java.nio.IntBuffer;

public class ViewBufferExample {

    public static void main(String[] args) {
        ByteBuffer byteBuffer = ByteBuffer.allocate(16);
        // Write data into buffer. This will take up the first 4 bytes of the backing array.
        // After writing, the position is 4.
        byteBuffer.put(new byte[] {1, 2, 3, 4});
        System.out.println("Print byteBuffer info: ");
        printInfo(byteBuffer);

        IntBuffer intBuffer = byteBuffer.asIntBuffer(); // Create an integer view buffer.
        System.out.println("Print intBuffer info: ");
        // The view's content starts at the byte buffer's current position, which is 4.
        // One int equals 4 bytes, so the remaining 12 bytes give a capacity of 3.
        printInfo(intBuffer);
        printIntBuffer(intBuffer);

        // Changes to the integer view buffer's content modify the byte buffer's content,
        // and vice versa. The buffer attributes of the two buffers are independent.
        // ByteBuffer's default byte order is big-endian, so 11 is stored as 0 0 0 11.
        intBuffer.put(11);
        intBuffer.put(22);
        intBuffer.put(33);
        printByteBuffer(byteBuffer);
    }

    public static void printInfo(Buffer buffer) {
        System.out.println("> Capacity: " + buffer.capacity());
        System.out.println("> Position: " + buffer.position());
        System.out.println("> Limit: " + buffer.limit());
    }

    public static void printByteBuffer(ByteBuffer byteBuffer) {
        System.out.print("Print byteBuffer content: \n> ");
        byteBuffer.rewind();
        while (byteBuffer.hasRemaining()) {
            System.out.printf("%d ", byteBuffer.get());
        }
        byteBuffer.rewind();
        System.out.println();
    }

    public static void printIntBuffer(IntBuffer intBuffer) {
        System.out.print("Print intBuffer content: \n> ");
        intBuffer.rewind();
        while (intBuffer.hasRemaining()) {
            System.out.printf("%d ", intBuffer.get());
        }
        intBuffer.rewind();
        System.out.println();
    }
}
```

Print byteBuffer info:

> Capacity: 16

> Position: 4

> Limit: 16

Print intBuffer info:

> Capacity: 3

> Position: 0

> Limit: 3

Print intBuffer content:

> 0 0 0

Print byteBuffer content:

> 1 2 3 4 0 0 0 11 0 0 0 22 0 0 0 33

Apart from ByteBuffer, the other primitive-type buffer classes do not provide a method to create a direct buffer. However, this can be done indirectly by creating a view buffer from a direct ByteBuffer object, since a view buffer is direct if, and only if, the byte buffer it is created from is direct.


```java
ByteBuffer byteBuffer = ByteBuffer.allocateDirect(16); // direct byte buffer
IntBuffer intBuffer = byteBuffer.asIntBuffer();        // direct int buffer
```

Flipping

flip() - An operation that is normally used to make the buffer ready for reading after writing. In this operation, the limit is set to the current position and then the position is set to zero. If the mark is defined, it is discarded. The diagrams below show how this operation changes the buffer attributes.

Before flipping.

After flipping.
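A typical write-then-read cycle can be sketched as follows. The class FlipDemo and its helper writeThenFlip() are hypothetical names used for illustration.

```java
import java.nio.ByteBuffer;

class FlipDemo {

    // Hypothetical helper: writes data into a new buffer, then flips it for reading.
    static ByteBuffer writeThenFlip(int capacity, byte[] data) {
        ByteBuffer buffer = ByteBuffer.allocate(capacity);
        buffer.put(data); // position advances to data.length
        buffer.flip();    // limit = old position, position = 0, mark discarded
        return buffer;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = writeThenFlip(8, new byte[] {1, 2, 3});
        System.out.println("position=" + buffer.position() + ", limit=" + buffer.limit()); // position=0, limit=3

        // Only the three bytes that were written can be read back: prints "1 2 3 ".
        while (buffer.hasRemaining()) {
            System.out.print(buffer.get() + " ");
        }
        System.out.println();
    }
}
```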

Rewind

rewind() - An operation that is used to make the buffer ready for re-reading. In this operation, the limit is unchanged and the position is set to zero. If the mark is defined, it is discarded. The diagrams below show how this operation changes the buffer attributes.

Before rewind.

After rewind.
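The sketch below reads the same content twice, calling rewind() in between. RewindDemo and sumRemaining() are hypothetical names; wrap() is used so that the buffer starts out ready for reading.

```java
import java.nio.ByteBuffer;

class RewindDemo {

    // Hypothetical helper: sums the remaining bytes, moving the position up to the limit.
    static int sumRemaining(ByteBuffer buffer) {
        int sum = 0;
        while (buffer.hasRemaining()) {
            sum += buffer.get();
        }
        return sum;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.wrap(new byte[] {1, 2, 3}); // position=0, limit=3

        int first = sumRemaining(buffer);  // position is now 3
        buffer.rewind();                   // position back to 0, limit stays 3
        int second = sumRemaining(buffer); // the same three bytes are read again

        System.out.println(first + " " + second + " limit=" + buffer.limit()); // 6 6 limit=3
    }
}
```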

Clear

clear() - An operation that is used to reset the buffer attributes to their default state, so that the buffer is ready to be filled again. Note that this operation does not erase the content. In this operation, the limit is set to the capacity, the position is set to zero, and if the mark is defined, it is discarded. The diagrams below show how this operation changes the buffer attributes.

Before clear.

After clear.
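The following sketch (hypothetical class name) shows that clear() resets the attributes but leaves the previously written bytes in place.

```java
import java.nio.ByteBuffer;

class ClearDemo {

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.allocate(4);
        buffer.put(new byte[] {7, 8, 9});
        buffer.flip(); // limit=3, position=0
        buffer.get();  // position=1

        buffer.clear(); // position=0, limit=capacity=4, mark discarded
        System.out.println("position=" + buffer.position() + ", limit=" + buffer.limit()); // position=0, limit=4

        // The old bytes are still there: clear() only resets the attributes.
        System.out.println(buffer.get(0) + " " + buffer.get(1) + " " + buffer.get(2)); // 7 8 9
    }
}
```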

Mark and reset

mark() - An operation that is used to capture and remember the current position.

reset() - An operation that is used to set the position back to the mark. It neither changes nor discards the mark. The diagrams below show how this operation changes the buffer attributes.

Before reset.

After reset.
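A minimal sketch of mark() and reset() on a wrapped array follows; the class name is hypothetical. It also shows that reset() keeps the mark, so it can be called more than once.

```java
import java.nio.ByteBuffer;

class MarkResetDemo {

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.wrap(new byte[] {10, 20, 30, 40, 50});
        buffer.get();  // reads 10, position=1
        buffer.mark(); // mark=1
        buffer.get();  // reads 20, position=2
        buffer.get();  // reads 30, position=3

        buffer.reset(); // position goes back to the mark
        System.out.println("position after reset: " + buffer.position()); // 1
        System.out.println("next byte: " + buffer.get());                 // 20

        // reset() keeps the mark, so a second reset returns to index 1 again.
        buffer.reset();
        System.out.println("position after second reset: " + buffer.position()); // 1
    }
}
```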

Compact

compact() - An operation that is used when reading from and writing to a buffer alternate continuously. It serves the situation where the buffer content has not been completely read, but the next write is about to start. In this operation, the bytes between the buffer's current position and its limit, if any, are copied to the beginning of the buffer. That is, the byte at index p = position() is copied to index zero, the byte at index p + 1 is copied to index one, and so forth until the byte at index limit() - 1 is copied to index n = limit() - 1 - p. The buffer's position is then set to n + 1 and its limit is set to its capacity. The mark, if defined, is discarded. Unlike the operations above, compact() is declared in the primitive buffer classes such as ByteBuffer rather than in Buffer itself.

```java
import java.nio.ByteBuffer;

public class CompactExample {

    public static void main(String[] args) {
        ByteBuffer byteBuffer = ByteBuffer.allocate(20);
        byte[][] dataToBeWrittenIntoBuffer = new byte[][] {
            {10, 20, 30, 40, 50, 60, 70, 80, 90, 100},
            {11, 22, 33, 44, 55, 66, 77, 88, 99, 111}
        };
        byte[] dataToBeReadFromBuffer = new byte[5];

        for (byte[] data : dataToBeWrittenIntoBuffer) {
            System.out.println("Write data into buffer.");
            byteBuffer.put(data);
            printBytes(byteBuffer.array());

            byteBuffer.flip(); // Prepare the buffer for reading.
            System.out.println("Read data from buffer.");
            // Read partially. Only 5 elements are read.
            byteBuffer.get(dataToBeReadFromBuffer);

            System.out.println("Compact buffer.");
            // Move the unread elements to the start and get ready for the next write.
            byteBuffer.compact();
            printBytes(byteBuffer.array());
        }
    }

    public static void printBytes(byte[] bytes) {
        for (byte b : bytes) {
            System.out.printf("%d ", b);
        }
        System.out.println();
    }
}
```

Write data into buffer.

10 20 30 40 50 60 70 80 90 100 0 0 0 0 0 0 0 0 0 0

Read data from buffer.

Compact buffer.

60 70 80 90 100 60 70 80 90 100 0 0 0 0 0 0 0 0 0 0

Write data into buffer.

60 70 80 90 100 11 22 33 44 55 66 77 88 99 111 0 0 0 0 0

Read data from buffer.

Compact buffer.

11 22 33 44 55 66 77 88 99 111 66 77 88 99 111 0 0 0 0 0

The code example above reads only part of the buffer content each time, leaving some data unread. In this case, compact() moves the unread data to the beginning of the buffer, so that the subsequent write does not overwrite it, and the unread data can be read in the next read operation. As you can see from the result above, data 10 to 100 has been fully read out of the buffer.
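The index arithmetic in the compact() description can be checked with a smaller worked example. In the sketch below (hypothetical class name), six bytes are written and two are read, so before compacting p = position() = 2 and limit() = 6. Then n = limit() - 1 - p = 3, and the new position is n + 1 = 4, which equals the number of unread bytes.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

class CompactDemo {

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.allocate(8);
        buffer.put(new byte[] {1, 2, 3, 4, 5, 6});
        buffer.flip(); // position=0, limit=6
        buffer.get();
        buffer.get();  // p = position() = 2

        buffer.compact(); // bytes at indices 2..5 (3, 4, 5, 6) are copied to indices 0..3
        // n = limit() - 1 - p = 6 - 1 - 2 = 3, so the new position is n + 1 = 4
        System.out.println("position=" + buffer.position() + ", limit=" + buffer.limit()); // position=4, limit=8

        // Indices 4 and 5 still hold stale copies; the next write will overwrite them.
        System.out.println(Arrays.toString(buffer.array())); // [3, 4, 5, 6, 5, 6, 0, 0]
    }
}
```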

